Last weekend was The Big Yak – there's a post about the full event over on the Redcatco blog and a round-up on the IC Crowd site. In the second slot of the morning, I facilitated a session on surveys and feedback, or as Katie Marlow put it: "Evaluation & feedback in the clown room, separating the jokers from the realities." Surveys are both under-used and misused as a form of communication. This post sets out some structured thoughts based on the key points that came up during the discussions.

Surveys and Polls

There is a continuum of feedback gathering, from informal comments (either verbal or online), through simple polls, up to multi-page, rigorously designed survey instruments. Many businesses use formal employee engagement surveys, while others embed simple one-question polls in their intranet homepage. There is little substitute for a well-designed survey. It can take several iterations to refine the question wording and arrive at a survey that works well, especially in environments where English is not the first language. However, a quick poll can sometimes be useful for gauging reactions to a new policy or piece of communication. Understand what sort of feedback you need, and pick the appropriate tool.

Real-time or regular?

There is certainly an advantage in capturing feedback while it is fresh; however, regular, consistent surveys provide a way to track progress and adjust for biases in the responses. Many users of SurveyOptic conduct before-and-after surveys, or regular quarterly or annual surveys. Repeating the same survey enables them to measure the effectiveness of training and other initiatives. The timing of employee surveys is important, as the results can be skewed by recent or upcoming events, for example a recent restructuring or an upcoming review. Regular surveys require a subtly different design from one-off surveys, as the same questions need to remain usable potentially several years down the line.

You Would Say That, Wouldn’t You?

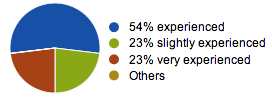

Beware of biases in your survey responses. Firstly, there is the issue of response bias. Different cultures (both national and corporate) answer questions with their own particular biases. Repeated surveys will give you the data to factor this out. Be careful of sample bias too. How did people get the survey? Who selected them? What percentage of people completed the survey (non-response bias)? It is often tempting to reward people for completing the survey, but with smaller surveys this too can create a sample bias. Incentives can be useful, but they tend to attract one psychographic group more than another.

Transparency – feeding back the feedback

Do you share the results of the survey? It may not be appropriate for every survey in every environment, but when it comes to feedback, showing all results, even the negative ones, is critical to building trust and buy-in. The act of sharing the feedback demonstrates listening, and is the first step in responding to and acting on it. It also provides an opportunity to validate the feedback that has been received. There is another aspect of transparency that is important for surveys that gather feedback: responses are always shaped by people's trust in the anonymity of the results. Great care needs to be taken with the collection of results, and especially with the use of verbatims. Working with an external partner can increase people's trust in the process, both that confidentiality will be preserved and that all of the feedback will be shared with the management team. Both of these drive up response rates.

Listen, Respond, Act

Worse than not listening is collecting feedback, sharing it, and then not acting on it. Have a clear plan to listen to the feedback, share and respond to the points raised, and then act on it. That should include negative or 'challenging' responses too. People will judge whether their feedback is taken seriously by the changes they see as a result of it. If staff can see real changes, they will value the act of completing the survey.

Get to the story

As with all data, it's important to get to the narrative behind it. What is the underlying story? Even a 97% response rate still means 3% didn't have their say, and they may be the ones sitting on issues. Bystanders matter, so don't just survey the usual suspects. Back up quantitative surveys with qualitative input: feedback groups, focus groups and interviews. When we design surveys, we usually start with some focus groups or interviews to test and shape the survey questions. Similarly, building in a selection of open-ended questions helps to round out the picture painted by the numbers.

With thanks to: Katie Marlow, Keith (@icnow), Jessica Roberts, Ramat Tejani, Lisa John, Zoe Mounsey, Corrinne Douglas, Krishan Lathigra and Andrew Hesselden, whose tweets provided many of the points for this post. If you have any questions about surveys, SurveyOptic, or gathering feedback, do get in touch or add a comment.