A conversation at the recent Dell B2B event at Google’s UK HQ, and a subsequent blog post, have finally prompted me into writing down some of my thoughts around the current trend of scoring influence, and the related social metrics industry that is being birthed out of both the US and the UK.

The question of measurement is an interesting one. My original engineering background led me to believe that anything can be measured, and that certainly seems to be the view that prevails across much of the computer programming world. My move into marketing quickly taught me that actually you couldn’t measure many of the things you needed to measure, and even when you could, the measurement was often so far after the fact as to be (at least commercially) useless.

Test Me On This

More recently, adventures in designing and carrying out psychology experiments have helped me realise that you can actually measure things that don’t exist, and that you can’t measure many things that do exist. Now, this isn’t news to any theoretical physicists out there, but it is something that many in social media haven’t yet figured out.

Measurement has long been a central tenet of the natural sciences. Come up with a hypothesis, then devise an experiment that involves measuring something that hopefully doesn’t disprove it (and, ideally, lets you reject the null hypothesis). Weights, heights, speeds and hundreds of other metrics have been constructed and calculated to enable us to describe and detail things in the physical world. However, this central tendency towards measurement is far from natural, and at times quite unscientific, when it comes to human beings.

We’ve Been Here Before, Haven’t We?

Applying behavioural measurements to human beings has a long history, and while Klout, Peerindex and Kred are wonderfully new and shiny (although increasingly less shiny in the case of Klout), they are the second cousins, once removed, of psychometrics – the scientific art of slapping a number on a human being. It is a science that is so problematic that there are not only shelves of books about it, there are also whole books written just about how problematic it is. Many of the thoughts here are inspired by “Putting Psychology in its place” by G. Richards, but most texts on psychometrics touch on the issues I’m going to raise. As I’ve read a fairly large number of them over the last 10 years or so, many of the sources have merged into an amorphous blob in my head, so I’m not going to pretend that any of what comes next is very original thought.

Just Because You Can Measure It…

…Doesn’t mean that it exists. One word: Reification. It is possible, simply by measuring something, to bring it into being. This isn’t some weird mystery taking place, it is an epistemological phenomenon that unfolds around the world of the natural sciences. If I create a “flumpy” score for humans, devise a scale for measuring “flumpiness”, and a tool for assessing a “flump” score for each of my friends, then I will have a repeatable, ‘scientific’ and objectively valid measurement. That’s even though there is no real-world correlate for ‘flumpiness’ – although my spell checker seems to think it is frumpiness, that is by the by. Now, if I can get people to believe that people with high degrees of flumpiness are more loyal customers, and should be given higher discounts, then my work is complete. The customers get their discounts, they become more loyal, I measure their flumpiness to prove how effective a predictor it is, and I have myself a multimillion-dollar industry.
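To make the flumpiness loop concrete, here is a minimal sketch of how a meaningless score can end up "predicting" loyalty. Everything in it is an assumption made up for illustration: the `flumpiness` function, the threshold of 50, the 15-point loyalty boost from a discount, and the simulated customers. It is a toy, not a model of any real scoring service.

```python
import random
import statistics
from typing import Tuple


def flumpiness(name: str) -> int:
    """A made-up score in 0..99: character codes summed, modulo 100.

    It is perfectly repeatable, and it measures nothing at all.
    """
    return sum(ord(ch) for ch in name) % 100


def simulate(n_customers: int = 2000, seed: int = 1) -> Tuple[float, float]:
    """Return mean loyalty of (high-flumpiness, low-flumpiness) customers."""
    rng = random.Random(seed)
    high, low = [], []
    for i in range(n_customers):
        score = flumpiness(f"customer-{i}")
        # Underlying loyalty is drawn independently of the score.
        base_loyalty = rng.gauss(50, 10)
        # Hypothetical policy: high scorers get a discount,
        # and the discount itself makes them more loyal.
        gets_discount = score >= 50
        loyalty = base_loyalty + (15 if gets_discount else 0)
        (high if gets_discount else low).append(loyalty)
    return statistics.mean(high), statistics.mean(low)


high_mean, low_mean = simulate()
print(f"high flumpiness: {high_mean:.1f}, low flumpiness: {low_mean:.1f}")
```

The high scorers come out more loyal, but only because the discount was handed out on the basis of the score. The measurement has manufactured the very evidence that appears to validate it.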

You’ve Got to be Objective?

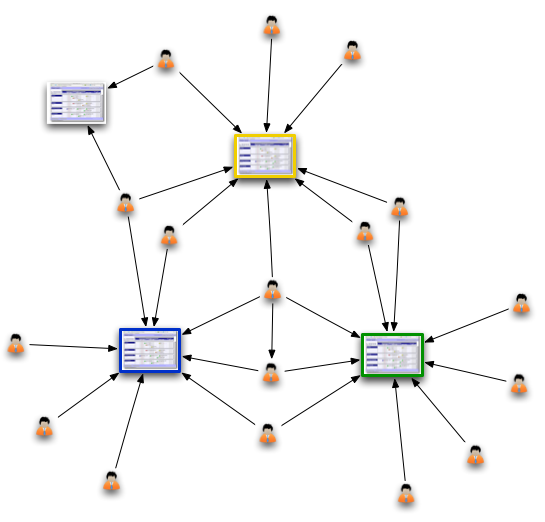

Measurements, including those in the social media world, have to latch on to externally observable phenomena, from number of followers to the propagation of messages. These are the lingua franca of the natural sciences, and they are the only objective measures that we have. But, and this is a very big, ugly but, behavioural measures such as influence are inherently individual and personal measurements, and thus they belong to the subjective domain. They are concerned with the inner worlds of individuals. These are worlds that will be the last to be explored by mankind, and according to Socrates, the least explored by man.

If we hardly know what is going off in our own minds, how can we understand what is going off in the minds of others? Think about the last product purchase you made. Why did you make it? No, really, why did you make it? What was the chain of micro decisions and chance happenings that led you to purchase product X rather than product Y? How many things and people influenced your decisions along the way? And that’s just the ones you were consciously aware of. Many more will have crept in subconsciously.

The task facing Psychology once it moves beyond simple phenomena like reaction times has been identifying overt, publicly ‘measurable’, indices of the essentially inaccessible phenomena it seeks to study such as memory, motivation, thinking, imagery, the structure of personality and intelligence.

Thinking Is…

Rearranging our current prejudices? Right now, that’s pretty much what all of the social media influence metrics I have seen are. The assumptions (which is just a nice way of saying the prejudices) of some well-meaning individuals, projected onto available metrics (which may or may not correlate with ‘flumpiness’). If someone has constructed an experiment that predicted someone’s influence, then measured the actual influence on someone’s real world behaviours, then I missed that blog post. Even if they had, then they are at the start of the 100+ year journey that has led psychology to an ongoing set of experiments, debates and hypotheses about what are and are not valid psychometric instruments (probably not Myers-Briggs, maybe 16-PF, possibly OCEAN/Big 5).

One Thing I Know

All that said, those that were in the room, or that followed the first link in this post, will remember that I said “yes”, I did think that there could be a single measure of influence. The trick is in the domain-specificity of that influence. Could you construct a measure of the likelihood that I might retweet a link on a specific topic, on a specific day and time? Yes, you absolutely could. It also probably wouldn’t be valid in a few years’ time, or possibly even a few weeks’ time, as my interests wax and wane. Oh, and of course, it would just be a probability – you have a measure that gives you “quite likely” – it is not “will” or “won’t”. The measure will also have an error range, which will be a very large one if the +/-50% changes in Klout scores are anything to go by.
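As a rough illustration of "a probability with an error range", the sketch below estimates a retweet probability from a handful of past observations and attaches a Wilson score interval to it. The numbers (7 retweets out of 10 links seen on a topic) are invented, and the Wilson interval is simply one standard way of putting bounds on a proportion; nothing here reflects how Klout or any other service actually works.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate 95% Wilson score interval for a proportion (z = 1.96)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = successes / trials
    denom = 1 + z * z / trials
    centre = (p_hat + z * z / (2 * trials)) / denom
    half_width = (
        z * math.sqrt(p_hat * (1 - p_hat) / trials + z * z / (4 * trials * trials))
    ) / denom
    return max(0.0, centre - half_width), min(1.0, centre + half_width)


# Hypothetical data: I retweeted 7 of the last 10 links I saw on one topic.
low, high = wilson_interval(7, 10)
print(f"estimated retweet probability: 0.70, plausible range {low:.2f} to {high:.2f}")
```

With only ten observations the plausible range runs from roughly 0.40 to 0.89, which is the "quite likely, but don't bet on it" territory described above. More observations narrow the range, but only for as long as the underlying interest stays put.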

On That Subject

Of course, this new shiny measure wouldn’t be valid for a different topic (I don’t think I’ve retweeted much on knitting recently, although I did tweet something about knitting QR codes!). One of the lunch-time games in the office, which has led to much hilarity, is seeing what topics we are apparently influential for. Apparently, we have expertise in social media (of course), jam (don’t ask), toothpaste (I said, don’t ask) and … You get the idea. Computer algorithms for assigning opinions to categories are a fine art, and even getting groups of humans to do it reliably is a regular form of intense frustration in psychology studies.

You Might Be Lucky

If a narrow, transient and probabilistic measurement with a wide margin of error is what you are after, then your luck may be in (no pun intended). Given that people sort CVs by the number of pages, or the handwriting on them, using influence scores to hand out favours and goodies is probably no greater crime against humanity. Just be aware of the dice that you are rolling.

I do believe that everything can be measured. However, to measure something, we need to ascertain all the variables which are relevant and then develop a machine which can accurately determine a value for those variables… that’s the fun bit!

Hi Rob! Thank you for the comment – that’s definitely a classic positivist approach, and the mindset that we are educated into in the scientific tradition in the Western World. It’s very situated though, both historically and geographically, and a view that frays at the edges. If you think about quantum physics, even within the positivist mindset, you can see where it starts to break down: If we can’t say, with certainty, where something as simple as an electron is at any given moment, how can we measure something as complex as an opinion? Scientific philosophers have wriggled around this in various ways (for example instrumentalism), while others, like Kant, discounted psychology from the natural sciences model, specifically because of these difficulties in measurement.

We like to measure things, because, at least subconsciously, we feel that if we can measure them, then we can control and manage them. It’s a paradigm that sprawls across the corporate world. To admit something can’t be measured almost bankrupts our worldview. The reality is that most management dashboards are an elaborate exercise in retrofitting, and actually the number of variables involved in any real world situation is so huge as to make the capture of them untenable. And that is before we even get into the qualitative versus quantitative debate, a can of worms I didn’t even open here. Integers may seem to rule the business world, but when it comes to individual decision making, almost all of the modern evidence points to the primacy of emotional (qualitative) factors.

In our work here, we see this divide on a daily basis. Milestone Planner, and the sea of data it creates, show that we can measure project progress in hard numbers, such as the number of milestones or actions completed or outstanding. We can derive and calculate interesting things like the number of days of backlog, project velocity and all sorts of project metrics. However, whether an individual completes a task or not has nothing to do with any of those numbers; it’s down to their ‘motivation level’ (there’s a 50 year research project – and no, it isn’t a number either ;) ), the distractions and barriers that get in the way, and a thousand other things that even the most sophisticated technology is never going to be able to capture, process and predict. Down at the neuropsychological level, it might even be the momentary concentration of a protein from the chocolate bar eaten at lunch that tips the balance! So, the numbers are useful (and we believe, valuable ;) ) indicators, but they aren’t generalisable, and they aren’t deterministic.

Terrific post. You sent me to the dictionary more than once–always a good thing.

I’ve ranted on this at SocialMediaToday. I’m less eloquent, but focused more on “conversion:” http://bit.ly/uYsewz

Reification, indeed. It will be intriguing to see what shakes out in the coming weeks now even the New York Times is on the case.

Really interesting stuff drawn from a wide range of sources. Social influence measurement does feel flaky at best, just as machine-read sentiment scoring does. As a softer measure and guide to what is more likely to work, it is often a good starting point. Putting a number on influencer value should be more useful, interesting and predictable over several interactions or test cases, listening to responses/watching effects on KPIs.

Hardly an exact science, but a case of test and learn. With so many variables in play, influencers could then still be seen as useful if nothing else.